- Google has introduced AI-based models to improve the quality of low-resolution images.

- The two diffusion models can generate high-fidelity images.

- These models have plenty of applications, including the improvement of medical imaging systems.

Google has introduced AI-based image upscaling technology that is said to enhance the quality of low-resolution images. In a post on Google’s AI blog, researchers from the Brain Team presented two diffusion models for generating high-fidelity images: Super-Resolution via Repeated Refinement (SR3) and Cascaded Diffusion Models (CDM).

What is Image Super-Resolution?

The first of these models is Super-Resolution via Repeated Refinement, or SR3. The research team defines it as “a super-resolution diffusion model that takes as input a low-resolution image, and builds a corresponding high-resolution image from pure noise.”

To train it, the team uses an image corruption process in which noise is progressively added to a high-resolution image until only pure noise remains. The model then learns to reverse this process: it starts from pure noise and removes it step by step, reaching a target distribution “through the guidance of the input low-resolution image.”
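The corruption process described above can be sketched in a few lines of Python. This is a minimal illustration only: it assumes a standard DDPM-style Gaussian forward process with a linear noise schedule, which may differ from the schedule SR3 actually uses. The function names, the schedule values, and the toy 16-pixel "image" are all hypothetical choices made for the example.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, linearly spaced (an assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_signal(betas):
    """alpha_bar[t] = product of (1 - beta) up to step t,
    i.e. the fraction of the original signal variance still present."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def corrupt(pixels, alpha_bar, rng):
    """Jump directly to a noise level: x_t = sqrt(a) * x_0 + sqrt(1 - a) * eps."""
    keep = math.sqrt(alpha_bar)
    noise = math.sqrt(1.0 - alpha_bar)
    return [keep * p + noise * rng.gauss(0.0, 1.0) for p in pixels]

rng = random.Random(0)
image = [rng.uniform(-1.0, 1.0) for _ in range(16)]  # toy stand-in for a high-resolution image
alpha_bars = cumulative_signal(linear_beta_schedule(1000))

for t in (0, 250, 500, 999):
    noisy = corrupt(image, alpha_bars[t], rng)
    print(f"step {t:4d}: signal weight {math.sqrt(alpha_bars[t]):.3f}")
```

The signal weight shrinks toward zero as the step index grows, so by the last step the sample is almost entirely noise. A trained model would run this in reverse, which the sketch does not attempt.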

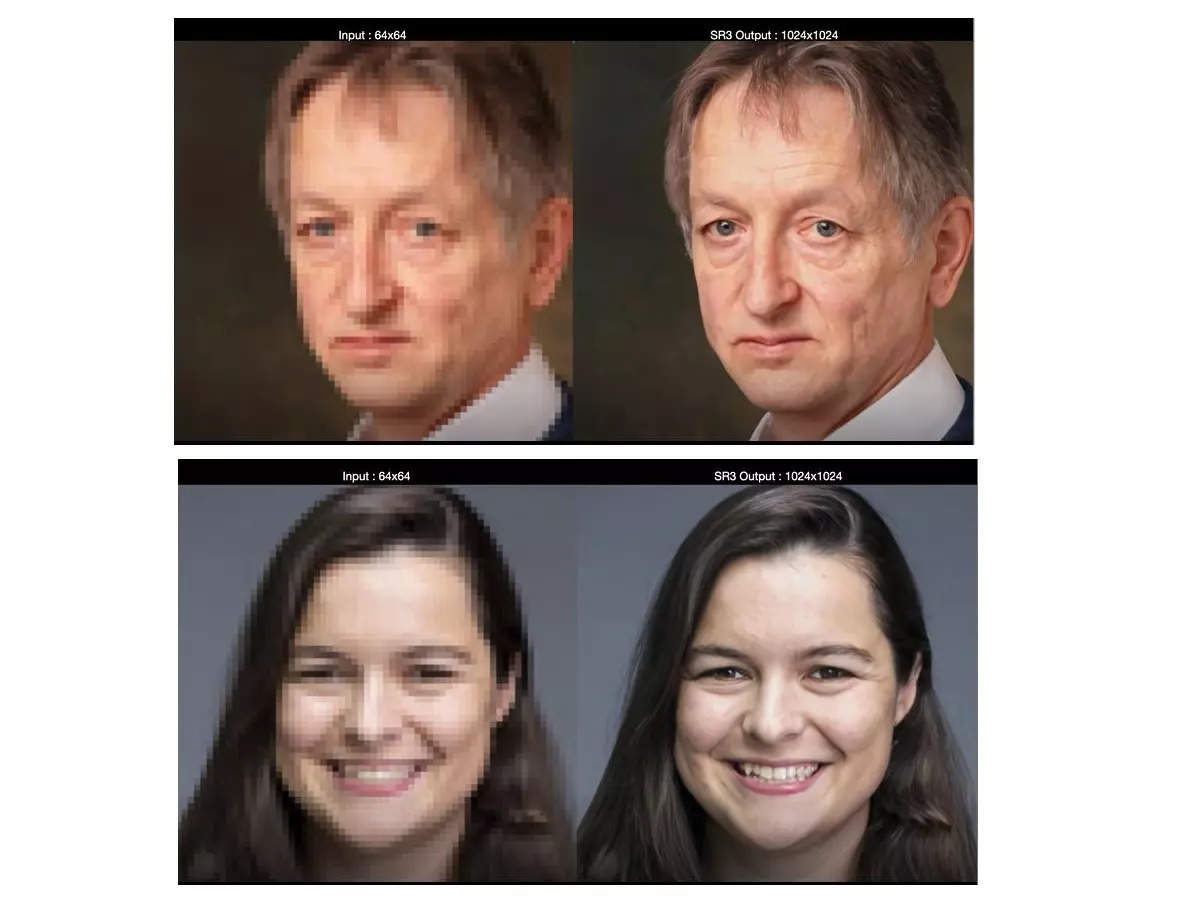

Super-resolution results (Image: Google)

Some of the results shown by Google’s research team are impressive and showcase how effectively this method can improve the quality of low-resolution images. According to the post, super-resolution has multiple applications, including enhancing existing medical imaging systems and restoring old family portraits.

Cascaded Diffusion Models (CDM)

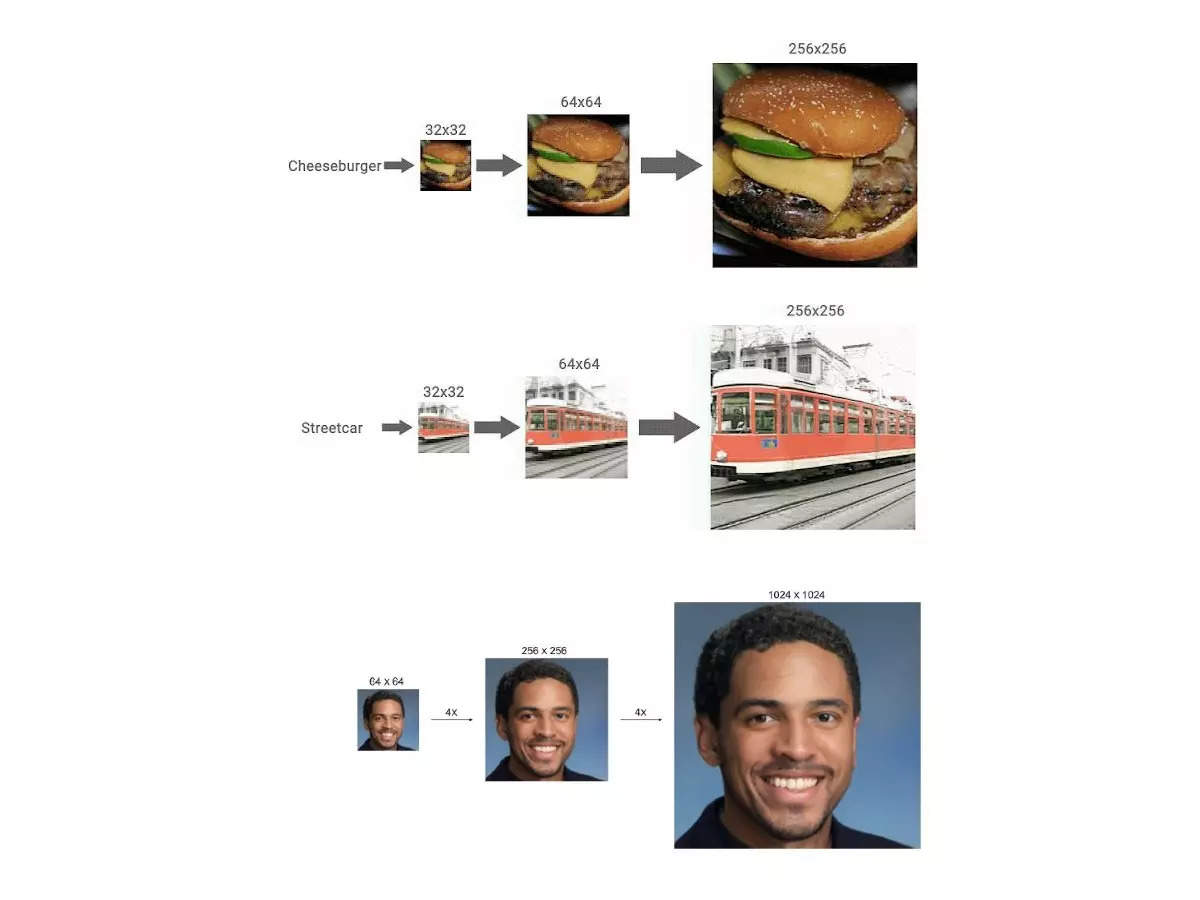

After SR3 proved effective, the Brain Team used it for class-conditional image generation. The researchers describe CDM as “a class-conditional diffusion model trained on ImageNet data to generate high-resolution natural images.”

According to the post, Google built CDM as “a cascade of multiple diffusion models” because ImageNet is a difficult, high-entropy dataset. The cascade chains several diffusion models that generate images of increasing resolution: it starts with a standard diffusion model at the lowest resolution, followed by a sequence of super-resolution models that successively upscale the image and add higher-resolution details.
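The structure of such a cascade can be sketched as follows. This is a toy illustration, not Google's implementation: `base_model` and `super_resolution_stage` are hypothetical stand-ins (random pixels and nearest-neighbor upsampling) for what would be trained diffusion models, and the sizes are deliberately tiny.

```python
import random

def base_model(size, rng):
    """Stand-in for the lowest-resolution diffusion model:
    returns a random size x size grayscale image."""
    return [[rng.random() for _ in range(size)] for _ in range(size)]

def super_resolution_stage(image, factor):
    """Stand-in for one super-resolution model: nearest-neighbor upsampling.
    A real stage would run a diffusion model conditioned on `image`."""
    upscaled = []
    for row in image:
        wide = [pixel for pixel in row for _ in range(factor)]
        upscaled.extend(list(wide) for _ in range(factor))
    return upscaled

def run_cascade(base_size, factors, rng):
    """Generate at the base resolution, then feed each stage's output to the next."""
    image = base_model(base_size, rng)
    sizes = [len(image)]
    for factor in factors:
        image = super_resolution_stage(image, factor)
        sizes.append(len(image))
    return image, sizes

image, sizes = run_cascade(base_size=4, factors=[2, 2], rng=random.Random(0))
print(sizes)  # [4, 8, 16]
```

The point of the sketch is the data flow: only the first stage generates an image from scratch, and every later stage works from the previous stage's output.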

Along with SR3, Google also uses a new data augmentation technique called “conditioning augmentation,” which is said to further improve the sample quality of CDM.

Example of the cascading model (Image: Google)

Using this cascading approach, a low-resolution 64×64 image can be upscaled to 256×256 and then further to 1024×1024, a 4× increase per side at each stage.

With these models, Google is looking to advance natural image synthesis, a field that has wide-ranging applications but poses design challenges. “With SR3 and CDM, we have pushed the performance of diffusion models to the state-of-the-art on super-resolution and class-conditional ImageNet generation benchmarks,” the researchers wrote in the blog post.